GENERIC SUPPORT FOR PARSING INTO XDATA

.. Generically parse FIT file messages into XDATA. The current
implementation does this for session, lap and totals messages,
but it could easily be extended to any other message type.

.. Generic parsing uses metadata rather than hard-coding the
message types, field types and so on.

.. The FIT metadata (FITmetadata.json) has been expanded to
include definitions of message types and all the standard
fields within each message type.

.. The existing hard-coded parsing remains, extracting data
and applying it directly to ridefile samples and metadata.
The generic parser simply adds extra XDATA tabs to the data
view so users can access the additional fields.
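A minimal sketch of metadata-driven parsing into XDATA-style tabs, assuming a simplified in-memory form of the metadata. The `FieldMeta`, `MessageMeta`, `RawMessage` and `XDataTab` types and the `toXData` function are hypothetical illustrations rather than GoldenCheetah code, and the real definitions come from FITmetadata.json instead of being written inline. Message numbers 18 (session) and 19 (lap) and the scale convention `value = raw / scale - offset` follow the FIT profile.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>
#include <vector>

// Hypothetical in-memory form of the message and field definitions that
// FITmetadata.json describes; the real file layout may differ.
struct FieldMeta {
    std::string name;
    double scale;   // FIT profile: value = raw / scale - offset
    double offset;
};

struct MessageMeta {
    std::string name;
    std::map<int, FieldMeta> fields;  // keyed by FIT field definition number
};

// One decoded message: FIT field number -> raw integer value.
using RawMessage = std::map<int, int64_t>;

// Hypothetical XDATA-style tab: a named series with fixed columns and
// one row per message.
struct XDataTab {
    std::string name;
    std::vector<std::string> columns;
    std::vector<std::vector<double>> rows;
};

// Build a tab for one message type purely from metadata lookups, so
// supporting another message type means adding metadata, not code.
XDataTab toXData(const MessageMeta& meta, const std::vector<RawMessage>& messages) {
    XDataTab tab{meta.name, {}, {}};
    for (const auto& [num, field] : meta.fields) {
        assert(field.scale != 0.0);  // scale is used as a divisor below
        tab.columns.push_back(field.name);
    }
    for (const auto& msg : messages) {
        std::vector<double> row;
        for (const auto& [num, field] : meta.fields) {
            auto it = msg.find(num);
            // A field absent from this message is written as 0 here;
            // a real parser might leave the cell empty instead.
            row.push_back(it == msg.end()
                              ? 0.0
                              : static_cast<double>(it->second) / field.scale - field.offset);
        }
        tab.rows.push_back(row);
    }
    return tab;
}

// Illustrative metadata: field 7 is total_elapsed_time (scale 1000, s),
// field 9 is total_distance (scale 100, m) in both session and lap.
const std::map<int, MessageMeta> kMetadata = {
    {18, {"SESSION", {{7, {"total_elapsed_time", 1000, 0}}, {9, {"total_distance", 100, 0}}}}},
    {19, {"LAP", {{7, {"total_elapsed_time", 1000, 0}}, {9, {"total_distance", 100, 0}}}}},
};
```

For example, passing two lap messages with raw `total_distance` values of 500000 and 510000 to `toXData(kMetadata.at(19), laps)` gives a "LAP" tab whose distance column reads 5000 and 5100 metres. The design point is that adding the totals message, or any other, only means adding an entry to the metadata.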

CODE REFACTORING, COMMENTS AND BUG FIXES

.. At some point the code needs to be refactored, as it is
janky and needs to align with the rest of the codebase.

.. Includes a mild refactor renaming some of the classes/structs
and variables to reflect what they actually are, for example:

   FitFileReadState -> FitFileParser
   FitDefinition -> FitMessage

.. Added lots of code comments and reorganised the code
into clear sections to help navigate what is a very
cumbersome source file. This breaks git blame history,
but it is worth the loss (you can check out an earlier
commit to do a full blame).

.. Changed debugging levels to be more helpful

.. Generally I did not change any code, but there were a
couple of serious bugs that needed to be corrected:

   Field definitions get the type wrong in a couple of
   places, since the base type number is stored in the
   low 5 bits:

       type = value & 0x1F

   The decodeDeveloperFieldDescription function did not
   check for NA_VALUEs for scale, offset and native field.

.. For less serious bugs, I added FIXME comments throughout the code.
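The two serious fixes above can be sketched as follows. This sketch assumes the FIT base type byte layout (bit 7 is the endian ability flag and bits 0-4 hold the base type number) and the FIT invalid sentinels 0xFF for uint8 and 0x7F for sint8. `DevFieldDesc` and `describeDevField` are hypothetical stand-ins, not the actual decodeDeveloperFieldDescription code, and the neutral defaults (scale 1, offset 0, no native field) are an assumption of the sketch.

```cpp
#include <cassert>
#include <cstdint>
#include <optional>

// FIT base type byte: bit 7 = endian ability, bits 5-6 reserved,
// bits 0-4 = base type number (0x00 enum ... 0x10 uint64z).
constexpr uint8_t kBaseTypeNumMask = 0x1F;  // low 5 bits
constexpr uint8_t kEndianAbility = 0x80;

constexpr uint8_t baseTypeNumber(uint8_t baseType) {
    return static_cast<uint8_t>(baseType & kBaseTypeNumMask);
}

constexpr bool hasEndianAbility(uint8_t baseType) {
    return (baseType & kEndianAbility) != 0;
}

// uint64z (0x90) only decodes correctly with the 5-bit mask;
// a 4-bit mask (0x0F) would turn it into base type 0 (enum).
static_assert(baseTypeNumber(0x90) == 0x10, "uint64z is base type number 16");
static_assert((0x90 & 0x0F) == 0x00, "a 4-bit mask loses bit 4");

// FIT invalid ("NA") sentinels for the field_description fields below.
constexpr uint8_t kInvalidUint8 = 0xFF;  // scale, native field number
constexpr int8_t kInvalidSint8 = 0x7F;   // offset

// Hypothetical decoded developer field description with NA values
// replaced by neutral defaults.
struct DevFieldDesc {
    double scale = 1.0;                     // 1 means no scaling
    double offset = 0.0;                    // 0 means no offset
    std::optional<uint8_t> nativeFieldNum;  // empty means no native equivalent
};

DevFieldDesc describeDevField(uint8_t rawScale, int8_t rawOffset, uint8_t rawNativeField) {
    DevFieldDesc d;
    if (rawScale != kInvalidUint8) d.scale = rawScale;
    if (rawOffset != kInvalidSint8) d.offset = rawOffset;
    if (rawNativeField != kInvalidUint8) d.nativeFieldNum = rawNativeField;
    return d;
}
```

Only base type number 16 (uint64z) actually needs the fifth bit, which is why a 4-bit mask can look correct on most files. Without the NA checks, an unset scale of 0xFF would silently divide developer field values by 255.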

Fixes #4416

GoldenCheetah

About

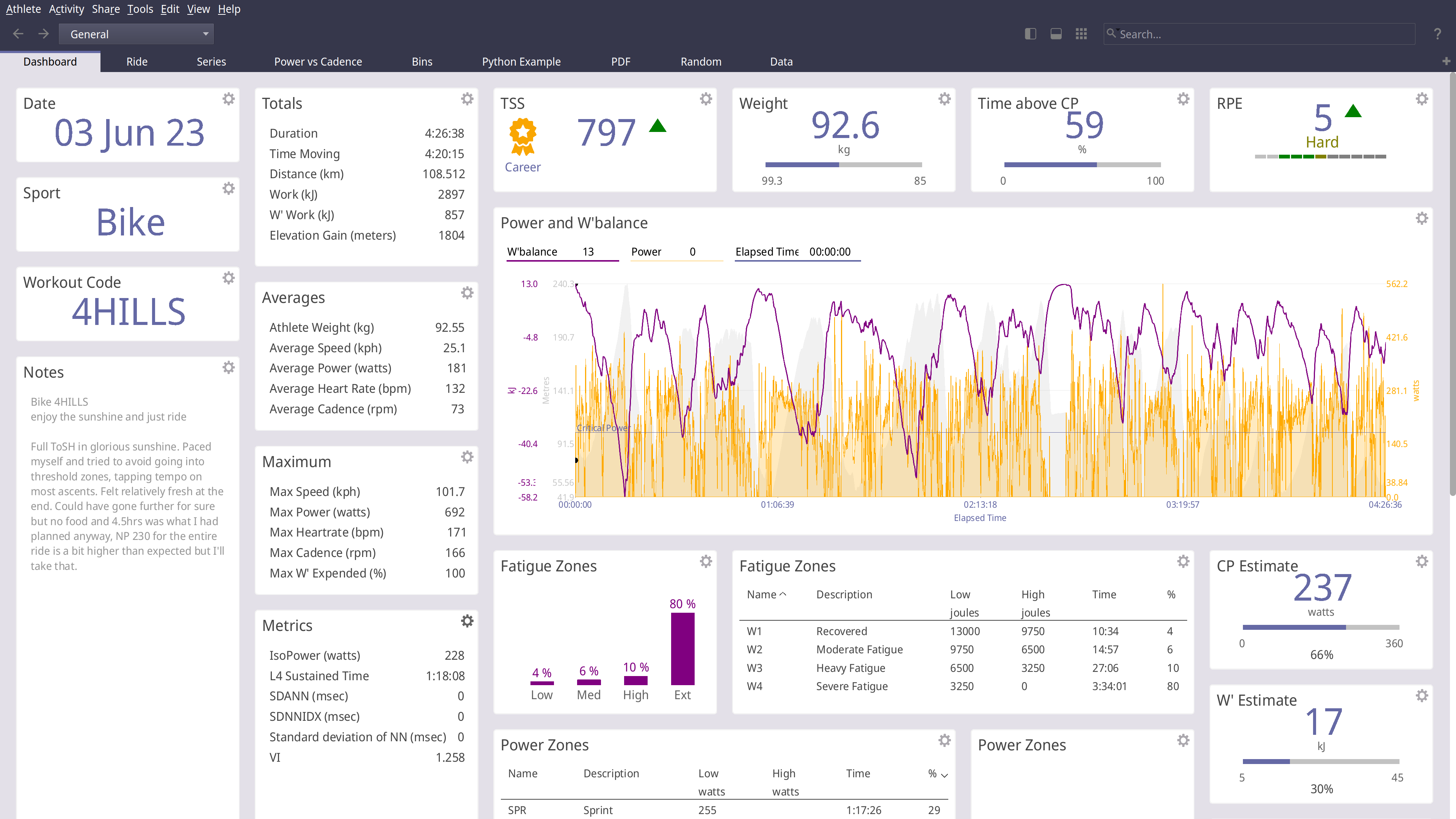

GoldenCheetah is a desktop application for cyclists, triathletes and coaches

- Analyse using summary metrics like BikeStress, TRIMP or RPE

- Extract insight via models like Critical Power and W'bal

- Track and predict performance using models like Banister and PMC

- Optimise aerodynamics using Virtual Elevation

- Train indoors with ANT and BTLE trainers

- Upload and Download with many cloud services including Strava, Withings and Todays Plan

- Import and export data to and from a wide range of bike computers and file formats

- Track body measures and equipment use, and set up your own metadata to track

GoldenCheetah provides tools for users to develop their own metrics, models and charts

- A high-performance and powerful built-in scripting language

- Local Python runtime or embedding a user installed runtime

- Embedded user installed R runtime

GoldenCheetah supports community sharing via the Cloud

- Upload and download user developed metrics

- Upload and download user, Python or R charts

- Import indoor workouts from the ErgDB

- Share anonymised data with researchers via the OpenData initiative

GoldenCheetah is free for everyone to use and modify, released under the GPL v2 open source license with pre-built binaries for Mac, Windows and Linux.

Installing

GoldenCheetah install and build instructions are documented for each platform:

INSTALL-WIN32: for building on Microsoft Windows

INSTALL-LINUX: for building on Linux

INSTALL-MAC: for building on Apple macOS

Official release builds, snapshots and development builds are all available from http://www.goldencheetah.org

NOTIO Fork

If you are looking for the NOTIO fork of GoldenCheetah it can be found here: https://github.com/notio-technologies/GCNotio